AI Publications

Some thoughts about Artificial Intelligence

In this section you will find articles, white papers, thoughts, quotes and other material related to AI.

#12 AI Data Quality: Crap in – Crap out

AI Data Quality – Every AI project relies on the data used to train the model. Contrary to what one might imagine, getting the right data in the right shape is far from easy or obvious. Building a quality dataset is engineering work in its own right. This paper covers the various steps involved.
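To give a flavour of that engineering work, here is a minimal Python sketch of a few basic checks that could be run on raw records before training: missing values, out-of-range readings and exact duplicates. The function name `audit_dataset` and the field names `intensity` and `label` are hypothetical and chosen only for illustration. A real pipeline, like the steps covered in the paper, would go further than this.

```python
def audit_dataset(rows, required, valid_ranges):
    """Report common data-quality problems in a list of records.

    rows: list of dicts (one dict per record)
    required: field names that must be present and not None
    valid_ranges: {field: (low, high)} accepted range for numeric fields
    (All names here are hypothetical, for illustration only.)
    """
    report = {"missing": [], "out_of_range": [], "duplicates": []}
    seen = set()
    for i, row in enumerate(rows):
        # 1. Missing values: required field absent or None
        for field in required:
            if row.get(field) is None:
                report["missing"].append((i, field))
        # 2. Out-of-range values: reading outside its plausible range
        for field, (low, high) in valid_ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                report["out_of_range"].append((i, field))
        # 3. Exact duplicates: same fields with the same values seen before
        key = tuple(sorted(row.items()))
        if key in seen:
            report["duplicates"].append(i)
        seen.add(key)
    return report
```

Each problem is reported with its row index, so it can be fixed or the row dropped before training rather than silently fed to the model.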

#11 AI: Fixing Training Gone Wrong

Building on the training pitfalls described in Paper #10 (underfitting, or too lazy; overfitting, or too rigidly tied to the training data; high bias, or skewed guesses; and high variance, or wild swings), this paper offers practical fixes for our smell detector. We explore three levers: increasing network capacity, extending training with more epochs, and enriching the data so the model learns better.

Sylvain LIEGE is now an AWS Certified AI Practitioner

We are pleased to share that Sylvain LIEGE has been certified by AWS as an AWS Certified AI Practitioner.

#10 AI Training Going Wrong

This paper explores why a model might fail in practice: underfitting (too simplistic), overfitting (too rigidly tied to its training data), and the underlying issues of bias and variance. Through examples, we show how underfitting leads to random guesses, while overfitting causes oversensitivity. We introduce bias (consistent errors) and variance (prediction variability).
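A common way to make bias and variance concrete is to train several versions of a model on different samples of the data and ask each one for a prediction on the same input. Bias is how far the average prediction sits from the true value, and variance is how much the predictions spread around that average. The Python sketch below assumes we already have those predictions. The function name `bias_and_variance` is hypothetical.

```python
from statistics import mean, pvariance


def bias_and_variance(predictions, true_value):
    """Estimate bias and variance for one input.

    predictions: outputs for the SAME input from models trained on
    different samples of the data (an assumption of this sketch).
    """
    avg = mean(predictions)
    bias = avg - true_value          # consistent error of the average guess
    variance = pvariance(predictions)  # spread of the guesses around their mean
    return bias, variance


# Underfitting-like behaviour: always the same, wrong answer
print(bias_and_variance([0.5, 0.5, 0.5], true_value=0.9))
# Overfitting-like behaviour: right on average, but scattered answers
print(bias_and_variance([0.2, 1.6, 0.9], true_value=0.9))
```

The first case shows high bias and zero variance. The second shows roughly zero bias and a large variance.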

#9 AI Training & Back Propagation

AI Training & Back Propagation – Before we can use a digital neural network, we need to train it. In this paper we present how to “train” one using supervised learning and backpropagation. By comparing the model’s output with the value we know to be correct, we can tune its parameters so that it solves the problem at hand.
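The smallest case of this idea is a single sigmoid neuron learning the logical OR function. The Python sketch below does three things on each example:

1. It runs a forward pass.
2. It compares the output with the known target using a squared error.
3. It uses the chain rule to move each weight in the direction that reduces that error.

In a multi-layer network, backpropagation applies the same chain-rule step layer by layer, from the output back to the input. The OR task, the learning rate and the epoch count are illustrative assumptions, not values taken from the paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x1, x2):
    """Forward pass of a single neuron with two inputs."""
    return sigmoid(w[0] * x1 + w[1] * x2 + b)


def train_neuron(samples, epochs=5000, lr=0.5, seed=0):
    """Supervised training of one sigmoid neuron by gradient descent."""
    rng = random.Random(seed)
    w = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            # Forward pass
            y = predict(w, b, x1, x2)
            # Backward pass for the loss L = 0.5 * (y - target)^2
            dL_dy = y - target            # how the loss changes with the output
            dy_dz = y * (1.0 - y)         # derivative of the sigmoid
            dL_dz = dL_dy * dy_dz         # chain rule
            # dz/dw_i = x_i and dz/db = 1, so step against each gradient
            w[0] -= lr * dL_dz * x1
            w[1] -= lr * dL_dz * x2
            b -= lr * dL_dz
    return w, b


OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_SAMPLES)
for (x1, x2), target in OR_SAMPLES:
    print((x1, x2), target, round(predict(w, b, x1, x2), 3))
```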

#8 – AI Forward Propagation

AI Forward Propagation – AI neural networks mimic the neural networks of the brain. In this paper we present what happens inside a digital neural network, from data entry to result. We study the various mathematical steps in their simplest form to give a global understanding of the inner mechanisms. This end-to-end process is called Forward Propagation.
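Here is a minimal Python sketch of that end-to-end process. It assumes sigmoid activations and fully connected layers, while the paper may make other choices. Each layer multiplies its inputs by weights, adds a bias and applies the activation, and the result becomes the input of the next layer. The names `dense` and `forward` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron computes sigmoid(sum(w * x) + b)."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed the input through each (weights, biases) layer in turn."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation


# Illustrative network: 2 inputs -> 2 hidden neurons -> 1 output (weights are made up)
layers = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                    # output layer
]
print(forward([1.0, 2.0], layers))
```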

#7 – Artificial Intelligence: Architecture: Neural Network Design

Artificial Intelligence: Architecture: Neural Network Design – AI neural networks mimic the neural networks of the brain. Once the technical architecture has been built, how does each component work? We present the various mathematical components in action.

#6 – Artificial Intelligence: Digital Neural Network Architecture

Neural Network Architecture – AI neural networks mimic the neural networks of the brain. But how do we build a digital neural network? What is its architecture? We present the basic components of such a technical solution.

#5 – AI: Neural Network Principles – from Biology to Digital

AI: From Biology to Digital.

AI neural networks mimic the neural networks of the brain. What principles drive a neural network? How did we draw on biology to create the most powerful “machines” ever built?

#4 – AI & Mathematics: Differential Calculus

AI & Mathematics: Differential Calculus – We explore the mathematical marvels used in AI to create systems that can “predict” the future.
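One place where calculus shows up in AI is the derivative, which tells us in which direction, and how steeply, a function changes. The Python sketch below approximates a derivative numerically and uses it for gradient descent, moving a parameter step by step towards the minimum of a function. It is an illustration under our own assumptions (a central-difference approximation and a fixed learning rate), not necessarily the approach taken in the paper.

```python
def derivative(f, x, h=1e-6):
    """Approximate f'(x) with the central difference (f(x + h) - f(x - h)) / 2h."""
    return (f(x + h) - f(x - h)) / (2 * h)


def minimise(f, x, lr=0.1, steps=100):
    """Gradient descent: repeatedly step against the slope of f."""
    for _ in range(steps):
        x -= lr * derivative(f, x)
    return x


def error(x):
    """Example error curve with its minimum at x = 3."""
    return (x - 3) ** 2


print(derivative(error, 0.0))  # slope of the curve at x = 0
print(minimise(error, 0.0))    # ends close to 3, where the slope is zero
```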

#3 – Artificial Intelligence & Mathematics: Algebra

AI Mathematics Algebra – Algebra is an essential mathematical tool for AI. It allows us to represent the real world in mathematical structures, such as vectors and matrices, that a computer can easily manipulate.

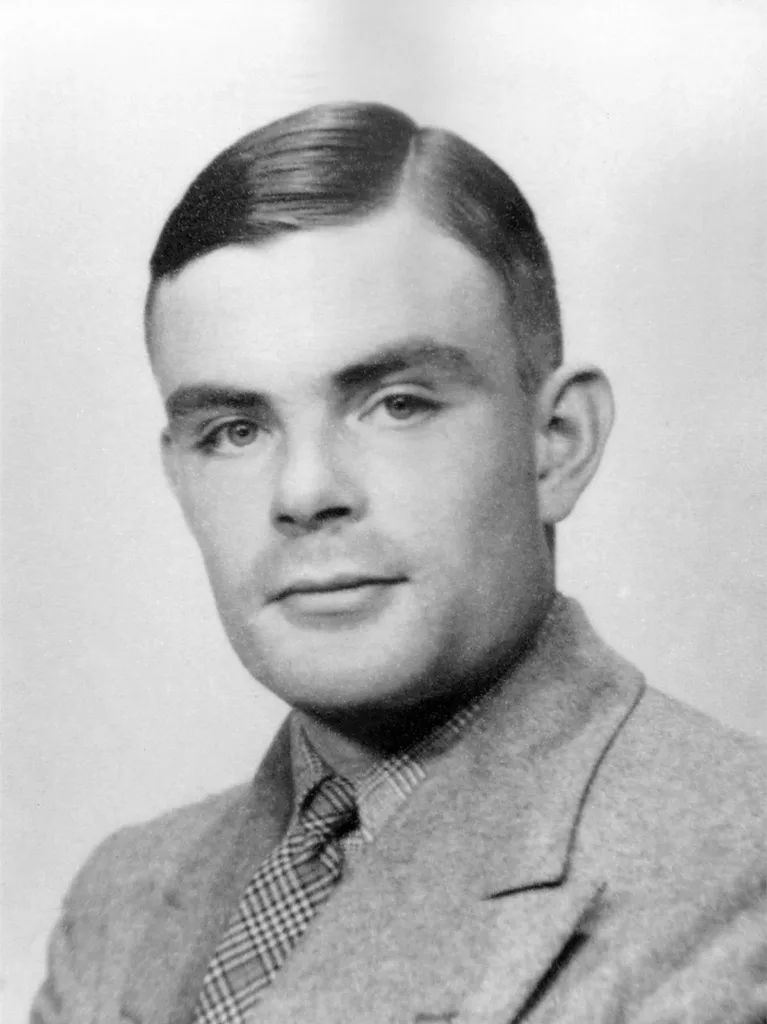
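A real-world observation, such as three hypothetical sensor readings, can be stored as a vector, and one layer of a neural network can be stored as a matrix. Applying the layer to the observation is then a matrix-vector product. The Python sketch below, which uses the hypothetical name `mat_vec`, spells out that product in plain code.

```python
def mat_vec(matrix, vector):
    """Multiply an m x n matrix (a list of m rows) by an n-vector."""
    if any(len(row) != len(vector) for row in matrix):
        raise ValueError("each matrix row must have as many entries as the vector")
    # Each output entry is the dot product of one matrix row with the vector
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


# Hypothetical observation: three sensor readings
sample = [0.2, 0.7, 0.1]
# Hypothetical 2 x 3 weight matrix turning 3 readings into 2 features
weights = [[1.0, 0.0, 0.5], [0.0, 2.0, 1.0]]
print(mat_vec(weights, sample))
```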

#2 – Artificial Intelligence Origins

Artificial Intelligence Origins: While it is almost never possible, and usually unfair, to credit a crucial human achievement to a single person, it is impossible not to name the great Alan Turing when we think about AI.