#11 Artificial Intelligence: Fixing the Training Gone Wrong

Summary

Topic: AI Architecture: Fixing Neural Network Training

Summary: Building on Paper #10’s training pitfalls—underfitting (too lazy), overfitting (too rigid), high bias (skewed guesses), and high variance (wild swings)—this paper offers practical fixes for our smell detector. We explore three levers: boosting network capacity, extending training with more epochs, and enriching data for smarter learning.

We recommend reading the previous articles in the series first, as they make this one easier to understand.

Keywords: AI; Neural Network; Machine Learning; Deep Learning; Overfitting; Underfitting; Bias; Variance; Regularization; Data Augmentation; Model Complexity; Epochs; Validation

Author: Sylvain LIÈGE

Note: This Paper was NOT written by AI, although AI may have been used for research purposes.

- #1 - The Hunt for Artificial Intelligence

- #2 - AI Origins

- #3 - AI Mathematics Algebra

- #4 - AI & Mathematics: Differential Calculus

- #5 - AI: From Biology to Digital

- #6 - Artificial Intelligence: Digital Neural Network Architecture

- #7 - AI Architecture: Neural Network Design

- #8 - AI Forward Propagation

- #9 - AI Training & Back Propagation

- #10 - AI Training going wrong

1 Introduction

Training an AI model is an expensive exercise. Gathering data, labelling it, engineering the parts that matter, creating balanced sets: it is a complex and often costly endeavour. In our previous paper, #10, we studied what can go wrong when training an AI model, which is precisely where your costs go up! Up! Up! Unfortunately, these issues are very common and must be handled properly to avoid disaster once the model is in production. In this paper, we will see what causes them and how to fix them. From improving the size and quality of the training dataset, to revisiting the nature of the information sent to the model, to tweaking the model’s complexity to adjust the precision of its learning, we will cover the most common ways to fix a training gone wrong. Not all solutions have the same cost or the same timeframe. Running a couple more epochs costs almost nothing, whereas setting up a GAN to create artificial data has much more serious cost implications. Businesses should know the most efficient way to fix a problem when it happens.

There will be almost no mathematics involved and the paper is accessible to all audiences. Hurray!

2 Recap on the model’s intent

One could think that defining the purpose of an AI model is fairly simple. “Detect edible food from smell” is simple enough, and we all understand what it means. But if we believe we understand what it means, it is also because our human brain can adapt to the context quickly and efficiently. In fact, those who do not adapt to the context of life have a serious tendency to die young. Rigidity has never been a sign of longevity.

The problem in IT is that a software system does not naturally adapt to circumstances. Or at least, in 2025, this is not fully achieved, and when it is, it is the result of painstaking work to make the system adaptable.

In our case, we are building a rather simple AI system and are not yet working at the level of Grok, ChatGPT, Gemini and the like. We are building a “simple” edible food detector. This means that we need to think about our intent very carefully to achieve a specialised system that does the expected job.

We have presented two very different uses for our food detector. The first is the mundane case of our civilized kitchens, where we want the system to be rather picky to avoid using unpleasant milk, a too-old egg or an “explosive” cheese. The second is the survival case, where we want the system to tell us everything we can eat without dying. Mouldy bread is much more appetizing when you are starving than when you are receiving friends for New Year’s Eve. The same “explosive” cheese will taste like a treat when it is a matter of life and death.

In the civilized kitchen, you want a picky attitude. In the survival kit, you want a very pragmatic system. The first keeps your tasting pleasure high, while the second keeps your odds of staying alive high.

To achieve the correct result, we need to train our system properly. Unfortunately, things do go wrong, as we have seen in Paper #10. Fortunately, as we will see in this paper, we know how to fix them.

3 The Dreyfus Model of Skill Acquisition

Developed in the 80s by Stuart and Hubert Dreyfus, this model outlines five stages of skill acquisition: Novice, Advanced Beginner, Competent, Proficient, and Expert. It’s widely used in education, psychology, and even AI to describe how humans (or systems) progress from rule-based learning to intuitive expertise.

I will use this framework to illustrate the various flaws our AI model could have after training, and what it means to be correctly trained. Here are the five stages of skill acquisition and how they map to the AI world.

3.1 Novice (Know Nothing)

- Dreyfus description: Follows strict rules without proper understanding of the context.

- Overfitting/Underfitting: Severe underfitting; the model knows little and cannot learn patterns.

- Bias/Variance: High bias and low variance: consistently wrong.

3.2 Advanced Beginner (Discover the Problem)

- Dreyfus description: Notices patterns but is still rigid and lacks the broader context.

- Overfitting/Underfitting: Mild underfitting; the model starts learning but misses general patterns.

- Bias/Variance: High bias with higher variance than at the Novice stage: often wrong, but sometimes right.

3.3 Competent (Understand Your Own Ignorance)

- Dreyfus description: Aware of the gaps and has plans to improve; can apply rules within the context.

- Overfitting/Underfitting: Balanced but fragile; can underfit or overfit quickly depending on tuning.

- Bias/Variance: Balanced bias (captures most patterns) but with higher variance (sensitive to noise).

3.4 Proficient (Know the Rules Extremely Well)

- Dreyfus description: Masters the rules and sees patterns fluently. This is still deliberate and not intuitive.

- Overfitting/Underfitting: Risk of overfitting. The model fits the training data too well and struggles to generalise.

- Bias/Variance: Low bias and high variance.

3.5 Expert (Derive/Break Rules for the Good of the Solution)

- Dreyfus description: Intuitive and adaptive, can break the rules when needed and also innovate.

- Overfitting/Underfitting: Balanced; the model generalises well and adapts to new data.

- Bias/Variance: Low bias and low variance; the model is stable on new data.

3.6 The Dreyfus model and AI

In this paper, we explain how to fix underfitting, overfitting, bias and variance. The table below summarises the symptoms, the problem and the fix for each stage.

| Stage | AI Behaviour | Problem | Fix |
|---|---|---|---|
| Novice | Always wrong, guesses randomly, like a new chef unable to judge smells. | Severe underfitting, high bias, low variance: consistently wrong predictions. | Add complexity (more layers/neurons), increase epochs, enrich data. |
| Advanced Beginner | Notices some smell patterns but often wrong, like a trainee chef with limited skills. | Mild underfitting, high bias, slightly higher variance: misses general patterns. | Add moderate complexity, more epochs, improve data. |
| Competent | Recognizes many smell patterns but fragile, can fail on new smells, like a chef aware of gaps. | Balanced but risks underfitting or overfitting, moderate bias, higher variance. | Fine-tune complexity, epochs, and data; monitor validation accuracy. |
| Proficient | Excels on trained smells but struggles with new ones, like a chef stuck on known recipes. | Overfitting, low bias, high variance: memorizes training data, poor generalization. | Simplify model, reduce epochs, increase/add data (GANs), reduce features. |
| Expert | Adapts to new smells reliably, like a master chef or survivalist. | Balanced, low bias, low variance: generalizes well, stable on new data. | Maintain balance with validation, adjust complexity/data as needed. |
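The table can be read as a rough decision rule based on two measurements: the model’s error on its training data, and its error on data it has never seen (a validation set). The Python sketch below is hypothetical: the function name `diagnose` and the thresholds `target_error` and `max_gap` are illustrative assumptions, not values from this series, and a real project would tune them for its own problem.

```python
def diagnose(train_error: float, val_error: float,
             target_error: float = 0.10, max_gap: float = 0.05) -> str:
    """Rough training diagnosis from error rates (0.0 = perfect, 1.0 = always wrong).

    The default thresholds are illustrative assumptions only.
    """
    gap = val_error - train_error  # how much worse the model does on unseen data
    if train_error > target_error:
        # Wrong even on the data it has studied: high bias, underfitting.
        return "underfitting: add complexity, run more epochs, enrich data/features"
    if gap > max_gap:
        # Good on known data, much worse on new data: high variance, overfitting.
        return "overfitting: simplify model, reduce epochs, add/augment data, reduce features"
    return "balanced: keep monitoring validation error"


print(diagnose(train_error=0.40, val_error=0.42))  # prints the "underfitting: ..." advice
print(diagnose(train_error=0.02, val_error=0.25))  # prints the "overfitting: ..." advice
print(diagnose(train_error=0.05, val_error=0.07))  # prints the "balanced: ..." advice
```

Checking training error first mirrors the table: a model that is wrong on its own training data has not learnt enough, whatever happens on new data.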

4 Fixing Underfitting: When the model is lazy

4.1 Symptoms

A model is underfitting when its outputs are only loosely relevant. Its answers feel more like the flip of a coin than an educated guess, or …the educated part is very poor. And indeed, that is exactly what is happening. An educated guess is only as good as the education behind it. If your education is based on a few special cases, anecdotes and hearsay, then I would not trust you with my life on detecting edible food, any more than I would trust you to design the perfect New Year’s Eve dinner.

4.2 Causes

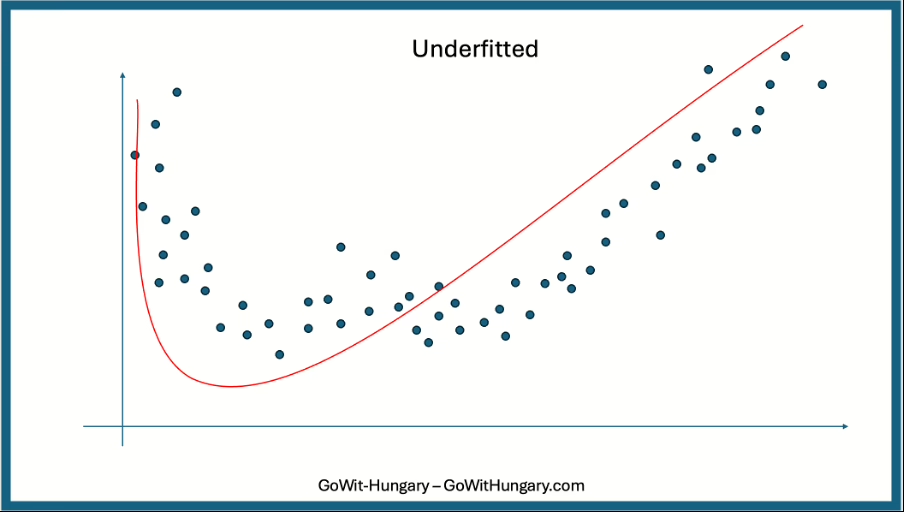

Generally speaking, the root cause is an overly simple model. The training has not gone far enough and/or the model’s structure is unable to deal with the complexity of the problem.

As we will see, the structure of the model and the way it is trained are absolutely key to getting the desired result out of it. Abraham Maslow captured a popular piece of wisdom in his 1966 book The Psychology of Science: A Reconnaissance, where he wrote: “I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.” This applies very well to AI and to training AI models. To get it right, the system must be able to recognise nails, but also what is not a nail, and learn to use many different tools to predict correctly what to do in a specific situation.

Our underfitted system knows nails, …more or less. We need to teach it more and teach it better.

4.3 Solutions

What can we do to increase the accuracy of our system? We have three main tools to make our system better:

- We can add complexity to increase the brain power

- We can train the model longer to improve its raw knowledge

- We can train the system with richer information to make the knowledge more accurate

4.3.1 Adding complexity

The concept of complexity is the most interesting one. In Paper #5, I presented how we mimic the human brain by constructing a digital neural network structured like it. Of course, it is an extreme simplification of the human brain, but the concept remains true. We established that in a human brain, “an adult has typically 86 billions of neurons, each neuron has between 1 000 and 10 000 synapses. So, it is said that we have between 100 and 1000 trillions of synapses.” With today’s technology, we are not even remotely capable of replicating this architecture. So, we do what we do best: simplify! But sometimes, we simplify too much.

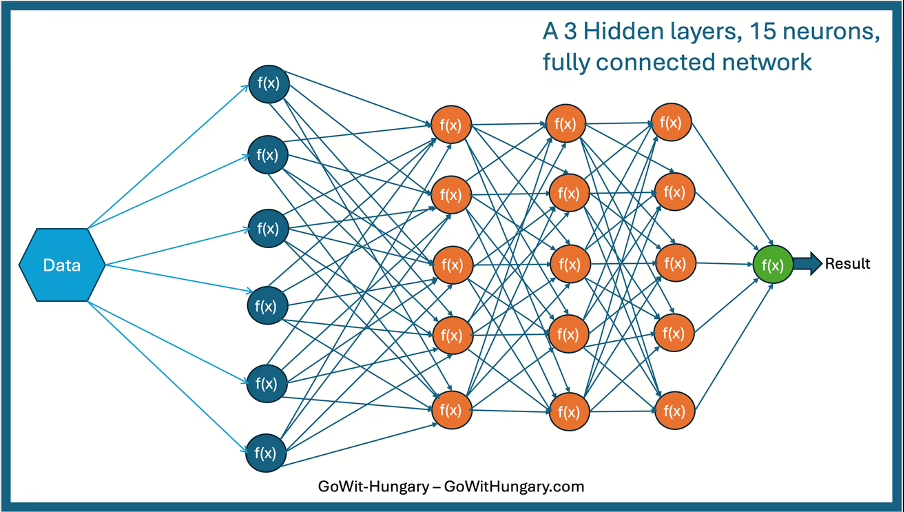

The digital neural network design is basically made of three elements: the number of layers, the number of neurons in each layer and the number of synaptic connections.

To illustrate our point, we will need some modesty. We cannot visualise a network of the size used in practice today, so what we describe as “complex” here would be very simple in reality, but you will get the point and can extrapolate later.

So, let’s imagine we create a network with 6 inputs, 3 hidden layers of 5 neurons each and 1 output neuron, fully connected (each neuron is connected to every neuron of the next layer).

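The general function of a neuron is:

$$\text{output} = f\left(w_1 a_1 + w_2 a_2 + \dots + w_n a_n + b\right)$$

Where:

- a_1, a_2… are the inputs (activations from the previous layer, or raw inputs for the first layer).

- w_1, w_2,… are the weights (one per input connection, the “synapses” from Paper #8).

- b is the neuron’s bias.

- f is the activation function.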
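To make the formula concrete, here is a minimal Python sketch of a single neuron. The sigmoid is an assumption chosen for illustration (the activation function used elsewhere in the series may differ), and the input, weight and bias values are made up.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus the bias, through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("one weight is needed per input connection")
    z = sum(w_i * a_i for w_i, a_i in zip(weights, inputs)) + bias
    return sigmoid(z)


# Hypothetical example: 6 inputs (one per smell feature), values made up for illustration.
a = [0.2, 0.8, 0.5, 0.1, 0.9, 0.3]
w = [0.4, -0.6, 0.3, 0.9, -0.2, 0.5]
print(neuron(a, w, bias=0.1))
```

Each weight multiplies exactly one input, which is why a layer’s weight count is always (inputs × neurons).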

Such a network would have:

- Weights: 85 (from connections: 6×5 + 5×5 + 5×5 + 5×1 = 30 + 25 + 25 + 5).

- Biases: 16 (one per neuron in the hidden and output layers: 5 + 5 + 5 + 1).

- Total parameters: 85 + 16 = 101.

This number, 101, can be thought of as the network’s capacity.

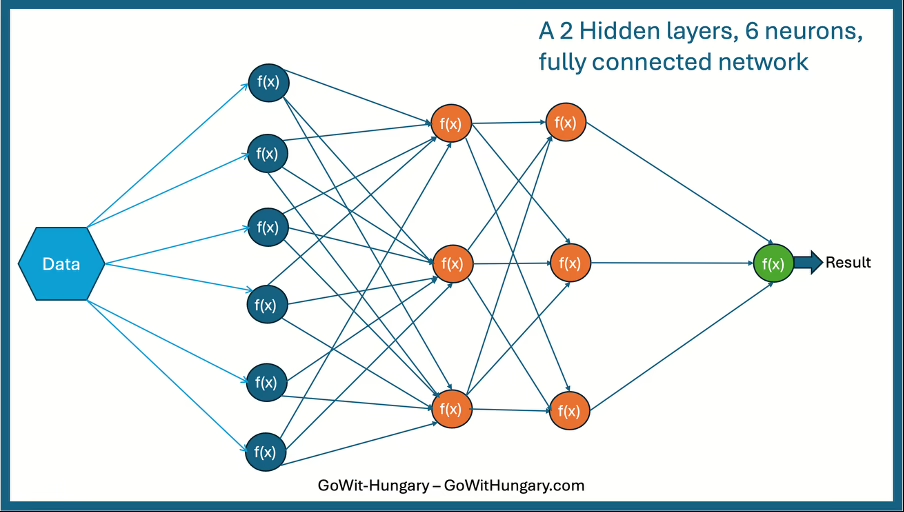

But, of course, we could design our system more simply by reducing the number of layers or the number of neurons. To visualise the potential, let’s draw two simpler solutions.

The first one, with 6 inputs, two hidden layers of 3 neurons and 1 output neuron, will have:

- Weights: 6×3 (18) + 3×3 (9) + 3×1 (3) = 30.

- Biases: 3 + 3 + 1 = 7.

- Total: 37 parameters. Capacity = 37

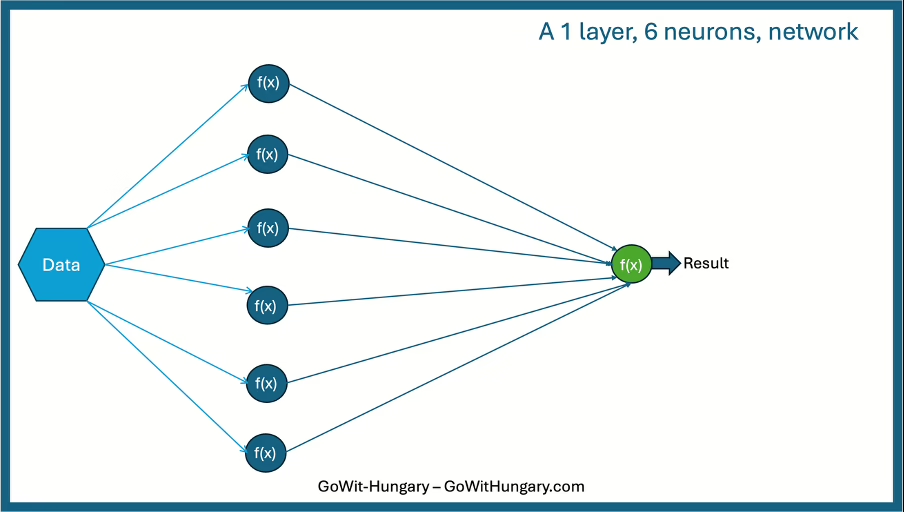

And of course, we could go radically simpler, with 6 inputs connected straight to 1 output and no hidden layers:

- Weights: 6×1 = 6.

- Bias: 1.

- Total: 7 parameters. Capacity = 7
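All three counts follow one rule for a fully connected network: each layer contributes (neurons in the previous layer × neurons in this layer) weights, plus one bias per neuron. The short Python sketch below reproduces them; `count_parameters` is a hypothetical helper, and its list of layer sizes starts with the number of inputs.

```python
def count_parameters(layer_sizes: list[int]) -> tuple[int, int, int]:
    """Return (weights, biases, total) for a fully connected network.

    layer_sizes[0] is the number of inputs; every later entry is a layer of
    neurons (hidden layers, then the output layer).
    """
    weights = 0
    biases = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights += n_in * n_out  # every neuron connects to every neuron of the next layer
        biases += n_out          # one bias per neuron (the inputs have none)
    return weights, biases, weights + biases


print(count_parameters([6, 5, 5, 5, 1]))  # (85, 16, 101)
print(count_parameters([6, 3, 3, 1]))     # (30, 7, 37)
print(count_parameters([6, 1]))           # (6, 1, 7)
```

Because weights grow with the product of adjacent layer sizes, widening layers inflates capacity much faster than it might seem.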


So, what can we do when we have a system that is lazy or almost dumb? We can increase the capacity of the network. In general terms, that means adding layers and neurons. With more parameters to work with, the network becomes more refined and more capable of finding extra information and extra patterns in the data submitted during training.

But this is only one part of the possible solution…

4.3.2 Extending the training: more epochs and better data

Like a human, a digital AI system learns from “experience”. A human gets two sources of training: academic training from other human beings, and field training, where mistakes are made and you learn the hard way.

In our example so far, we have used the easiest type of training to understand, which is “supervised training”. It amounts to telling the system: process this, and if you get it wrong, we will help you because we know the answer. Easy to grasp, easy to explain. There is another type of training called “unsupervised training”. This one is closer to the field experience a human being would acquire. We will have a chance to explain that marvel in another paper. In the meantime, we will stick to academic training, a.k.a. supervised training.

If you learn exclusively in an academic way, your capability to deal with new situations depends entirely on the quality of what your teachers have taught you. If you are learning the History of Empires and your teacher happens to have a thing for the Macedonian Empire, you are likely to be better at Macedonian history than at Roman history. And if you intend to be an all-around historian, you will be in trouble. You can sit the History exam, but when all the references you use to develop your answer on the Roman Empire relate to Alexander the Great, your examiner might not give you your diploma. Your knowledge is shaky and too focused on one area; even if you do know something about other periods, it is not enough. To pass your exam, you need to do better: you need to study the other periods and the other empires more. Only once you have done that will you start recognising patterns common to all empires, and only then will you connect the hidden dots that make sense of the facts. Only then will you be able to pass your History of Empires exam.

Of course, it is also possible that during your academic training, your teacher was not biased by a love of the Macedonian Empire. He was absolutely fair to all empires, but you did not study them enough. You did study them, but superficially. You know all the empires, but not well enough to get the bigger picture. You know many anecdotes, a few dates and some characters, but you lack depth. How was Julius Caesar inspired by Alexander? How could the mighty Roman Empire fall at the hands of “barbarians”? Here again, you will fail the exam.

It is basically the same for AI. A model can suffer from too shallow a knowledge of the data, or from poor-quality data. So, what can we do?

The easier problem to solve is the shallow knowledge of the data, the equivalent of superficial studying. As a reminder, here is how training worked in Paper #9:

We have a set of data that will be fed to the model, say 100 examples to keep things simple. For each example in this set, we:

- Feed the example into the model

- Observe the output

- Calculate the Loss

- Adjust the model (its weights and biases) according to the Loss

- Go back to the first step with the next example

One full round over the dataset is called an epoch. In one epoch, the model learns once from each example. It’s a bit like reading your whole history lesson once.
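The loop above can be sketched in a few lines of Python. This is a minimal, hypothetical example, not the code from Paper #9: a single sigmoid neuron learns a made-up “edible or not” rule from two features, using a squared-error loss and plain gradient descent, one example at a time. The `epochs` parameter is the number of full passes over the data; on this toy problem, the average training loss should drop as epochs increase.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(data, epochs, learning_rate=0.5, seed=0):
    """Train a single sigmoid neuron, updating it after every example.

    data: list of (features, label) pairs; label 1.0 = edible, 0.0 = not edible.
    Returns (weights, bias, average loss over the last epoch).
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]
    bias = 0.0
    avg_loss = 0.0
    for _ in range(epochs):  # one epoch = one full pass over the data
        total_loss = 0.0
        for features, label in data:
            # Feed the example into the model and observe the output
            output = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            # Calculate the loss (squared error)
            total_loss += (output - label) ** 2
            # Adjust weights and bias against the loss gradient (chain rule through the sigmoid)
            grad_z = 2.0 * (output - label) * output * (1.0 - output)
            weights = [w - learning_rate * grad_z * x for w, x in zip(weights, features)]
            bias -= learning_rate * grad_z
        avg_loss = total_loss / len(data)
    return weights, bias, avg_loss


# Hypothetical toy data: 100 examples of (pleasantness, familiarity) in [0, 1],
# labelled with a made-up rule: edible when the two scores add up to more than 1.
gen = random.Random(42)
examples = []
for _ in range(100):
    p, f = gen.random(), gen.random()
    examples.append(([p, f], 1.0 if p + f > 1.0 else 0.0))

for n in (1, 10, 100):
    _, _, loss = train(examples, epochs=n)
    print(f"{n:>3} epoch(s) -> average training loss {loss:.3f}")
```

Running more epochs is just a bigger number in a loop, which is why it is the cheapest fix for a model whose knowledge is too shallow.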

Every time we run an epoch, the system learns more from the data, and its knowledge gets deeper. It’s like you with every revision of your whole history lesson: the more you go through the material, the better you know it. So, if your knowledge is too shallow, you should work more on your course. It is the same for our model: if its knowledge is too shallow, we can run more epochs to train it. The good news is that this is very easy to do! We can run a huge number of epochs while we go home and sleep; the computer will do that very well for us.

The question now is: why not run plenty of epochs in the first place? Good question, and we’ll get there a bit later.

So, solution #1: run more epochs. The system gets far better with the data, and it should be better at doing its job. At a minimum, the underfitting problem should be addressed.

As for the first problem, your teacher being in love with the Macedonian Empire, it is trickier. It means that what you have been taught is not broad enough for your goal. You want to become a Historian of Empires, and to become one, you need to train on all empires. In this case, you need to tune the courseware: adjust the content and make it more generic.

It will be the same for AI. In a case like that you need to find better data to train from. And this can be much trickier to fix than running more epochs. You need to find more data sources and you need to make sure that these data are indeed better for the job. In practical terms, this is harder work, even for the AI specialist.

If we place ourselves in the context of a business training an AI system to help its customers, and the system is fed with only a subset of the problems that can arise, then the system is underfitting. And if you happen not to have any more data available to train your system, you are doomed. But this, too, will be the topic of another paper, dedicated to the quality of the data.

So, to summarize, to improve the training of an underfitted system, you can study the data better (more epochs) or you can improve the data themselves.

4.3.3 Feature engineering

So, we can increase the capacity of the network, we can spend more time on the data, and we can even improve the quality of the data. There is still another angle we can work on: the features.

The features are the elements of the data that we decide to extract and send to our model. As a matter of fact, data are rarely clean from the start. Coming back to our food detector, we have identified 6 parameters that seem fairly natural to use:

- Smell Type

- Intensity

- Concentration

- Pleasantness

- Familiarity

- Duration

These are the features of our current system. But in fact, we could imagine adding some more like:

Source temperature: Temperature of the smell’s origin (e.g., cold = 5°C, warm = 25°C, hot = 50°C).

Why Add It: Temperature affects volatility and perception—cold cheese smells milder, hot bread stronger. In survival mode, warm roots might signal freshness; in civilized mode, cold sour milk flags spoilage. It’s a physical trait tied to smell chemistry, not redundant with Intensity (strength at detection) or Concentration (density).

Volatility (Evaporation Rate): How fast the smell disperses (e.g., low = thick cheese, high = fleeting floral), measurable via chemical properties or a proxy such as vapour pressure.

Why Add It: Volatility shapes the smell experience. Slow-release odours linger (overlapping with Duration), while fast ones fade. Survival mode might link “high volatility earthy” to safety; civilized mode flags “low volatility sour = lingering bad milk.” It complements Concentration (amount) and Intensity (strength) as a distinct signal.

Adding new “features” to the data we send to the system can make it smarter, faster. It is like us humans gathering more data before making a decision, a common strategy for key decisions.

That said, “the perfect is the enemy of the good”, and no one wants to be overwhelmed with data either. Data are good as long as they contribute to the solution, which is not always the case. In our case, let’s imagine that at the start we wanted to be very thorough and decided to send as much data as possible to our model, such as:

- Time of day: We might think it is useful because we, as humans, experience a different set of smells in the morning than in the afternoon.

Why it is noise: a smell’s edibility is not tied to clock time. In survival mode, an edible root is edible at any time of the day. In civilised mode, sour milk is bad at any time of the day.

- Colour of the smell source: It sounds like a good idea, since green food might be better than brown food; for instance, brown cheese could be dodgy.

Why it is noise: We are building a smell detector, not a visual one. On top of that, colour is likely irrelevant since, in reality, veggies come in all sorts of colours and brown cheese can be delicious (believe the Frenchman here).

This is why feature engineering is all about deciding which data are really relevant to our problem. Most often, when the system is underfitting, we need to enrich the features by adding new ones. But sometimes, we are better off with feature reduction, removing the noise that stops the system from recognising relevant patterns. Like any decision maker, we want a lot of data, but we want relevant data. Irrelevant data take our focus away from solving the problem.
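In code, feature selection often comes down to deciding which fields of a raw sample become model inputs. The sketch below assumes each smell sample arrives as a Python dictionary of raw measurements; the field names, the numeric code for the smell type and the hypothetical `to_input_vector` function are illustrative assumptions. It keeps the six original features plus the two candidates discussed above, in a fixed order, and ignores noise fields such as time of day or colour.

```python
# Features judged relevant: the six original ones plus the two candidates discussed above.
# Names and numeric encodings are illustrative assumptions.
SELECTED_FEATURES = [
    "smell_type", "intensity", "concentration", "pleasantness",
    "familiarity", "duration", "source_temperature", "volatility",
]


def to_input_vector(sample: dict, features: list[str] = SELECTED_FEATURES) -> list[float]:
    """Turn a raw sample into the model's input vector, keeping only the selected features."""
    missing = [name for name in features if name not in sample]
    if missing:
        raise KeyError(f"sample is missing features: {missing}")
    return [float(sample[name]) for name in features]


raw = {
    "smell_type": 3, "intensity": 0.7, "concentration": 0.4, "pleasantness": 0.2,
    "familiarity": 0.9, "duration": 0.5, "source_temperature": 5.0, "volatility": 0.3,
    "time_of_day": 14, "colour": "brown",  # noise: never reaches the model
}
print(to_input_vector(raw))  # [3.0, 0.7, 0.4, 0.2, 0.9, 0.5, 5.0, 0.3]
```

Note that features also change the network itself: going from 6 to 8 inputs adds 2 × 5 = 10 weights to the first layer of the 6-5-5-5-1 network described earlier (101 to 111 parameters).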

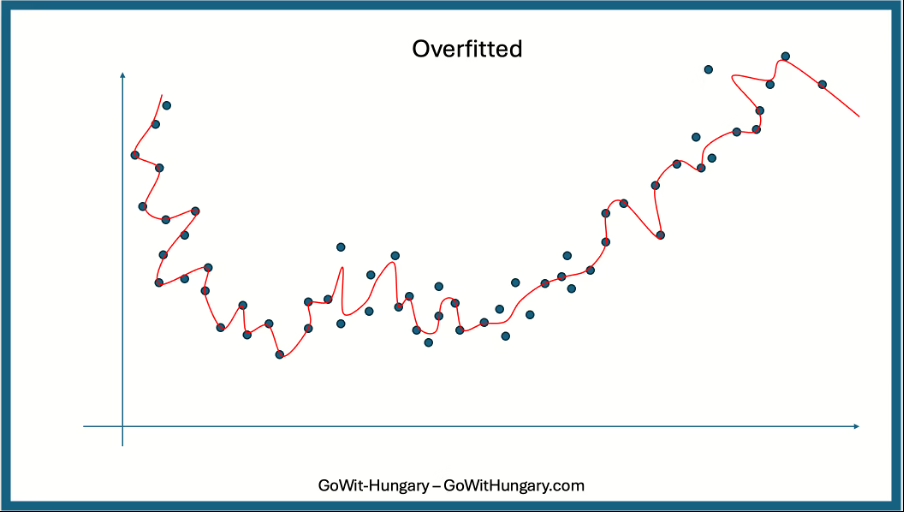

5 Fixing Overfitting: When the model is rigid

5.1 Symptoms

The model performs brilliantly on the training data but poorly on new data. A good model is a model that can recognise “patterns”. It is essential to understand that. It is like our Empire History student. We want him to understand Empires in general and be able to recognise a new empire when he sees one. To do that, the student would need to recognize various common characteristics of an empire. It could be things like:

- Centralized Power and Leadership Structure

- Expansion through military conquests

- Cultural assimilation

- Decline through overextension and internal strife

If our system is overfitting, it will recognise the leadership structures in the training data but not new ones; it will recognise the military conquests of, say, Alexander, but not those of the Persian Empire, and so on. Its knowledge is specific to the data used to train it!

Fixing overfitting helps the smell detector save costs, for instance by avoiding false positives that flag safe food as spoiled and send it to waste.
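Seeing this symptom requires data the model has never trained on. A minimal sketch, assuming the dataset fits in a Python list, is to shuffle it and hold out a fraction as a validation set; `train_validation_split` and its 20% default are hypothetical choices for illustration.

```python
import random


def train_validation_split(dataset, validation_fraction=0.2, seed=0):
    """Shuffle a copy of the dataset and hold out a fraction of it as 'new' data.

    The model only ever trains on the first part; the held-out part plays the role
    of food it has never smelled, so a large accuracy drop there signals overfitting.
    """
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(dataset)
    random.Random(seed).shuffle(shuffled)
    n_validation = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


train_set, validation_set = train_validation_split(list(range(100)))
print(len(train_set), len(validation_set))  # 80 20
```

Shuffling before splitting matters: if the data were sorted (say, all cheeses first), the validation set would otherwise contain foods the model never saw any example of.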

5.2 Causes

Generally speaking, the causes are the mirror of the underfitting causes. The model is so “smart” that it has learnt the training data almost by heart. But then, it is unable to generalise. It is like when someone is trying to create a solution to a problem and starts to “over-engineer” it, trying to be too smart for his own good.

5.3 Solutions

I will not present all the possible actions, but will focus on the easiest ones to understand. The point of this paper is not to teach you how to implement them, but to give you a good overview of how we can fix a training going bad.

5.3.1 Reduce model complexity

This is the mirror of the action we could take in the underfitting case. The complexity of the model allows it to retain the smallest details of the world it is trained on, and remembering too many details ends up creating noise. This noise stops the model from generalising what it has learnt. Consequently, by reducing the complexity of the model, we can reduce the hyper-specialisation it has acquired on the training set.

5.3.2 Feature reduction

Again, this mirrors the underfitting solutions. Just as a human being overwhelmed by irrelevant data can make better decisions once there is less to consider, a model can benefit from less input. Here, we are not talking about reducing the number of examples the model learns from, but about reducing the number of features describing each example. To caricature, entering the age of the chef or the weather of the day into the system to detect how edible a food is just makes things complicated and can reduce the quality of the decision for no reason. So, for a model receiving too many pieces of data as input, we can remove or combine some of them so that it has less information to decide from. When we reduce the model complexity, we reduce its capability to memorise noise. When we reduce the features, we feed it less noise to remember.

5.3.3 Reduce epochs

When we train a model, we go through the training data a certain number of times. The more we go through it, the better the model becomes with it, until it knows the training data too well. So, this might be a little counter-intuitive, but training the model less can result in better capability to adapt to new situations. So, too much training can be just that: too much. This is a very easy and cheap way to act against overfitting. Reducing epochs saves training time, cutting costs for businesses deploying the smell detector.
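Rather than guessing the right number of epochs, a common practice is early stopping: keep a validation set aside, check its loss after each epoch, and stop once it has not improved for a few epochs in a row. The sketch below is hypothetical: `train_one_epoch` and `validation_loss` stand for whatever training and evaluation code a project already has, and `patience` is an assumed setting.

```python
from typing import Callable


def fit_with_early_stopping(train_one_epoch: Callable[[], None],
                            validation_loss: Callable[[], float],
                            max_epochs: int = 1000,
                            patience: int = 5) -> tuple[int, float]:
    """Run epochs until the validation loss stops improving.

    Returns (best_epoch, best_loss). A real implementation would also save and
    restore the model weights from best_epoch; that part is omitted here.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        loss = validation_loss()
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # the model has started learning the training set by heart
    return best_epoch, best_loss


# Simulated validation curve: improves until epoch 4, then gets worse (overfitting).
curve = iter([0.50, 0.35, 0.28, 0.25, 0.26, 0.27, 0.29, 0.31, 0.34, 0.38, 0.41])
print(fit_with_early_stopping(lambda: None, lambda: next(curve), patience=3))  # (4, 0.25)
```

The validation curve decides when to stop, so the number of epochs adapts to the data instead of being fixed in advance.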

5.3.4 Increase training data

We have established that overfitting is knowing the training data too well. One way to correct that is to increase the size of the training dataset. Indeed, if the training set is too small, the model is likely to memorise it very quickly. It needs more data, more variety, good balance, etc. If you are learning about food but the training focuses too much on cheese, with only 10 different cheeses, then your model will become good at these 10 cheeses. So, providing a larger and more varied set will help.

5.3.5 Data augmentation

Increasing the training data set size is easy to say but not necessarily easy to do. Fortunately, we have solutions for that. One of them is data augmentation by using GANs (Generative Adversarial Networks).

In GANs, two neural networks—the generator and the discriminator—work in opposition:

- The generator creates synthetic data samples (e.g., new smell profiles for our smell detector) from random noise, attempting to mimic real data.

- The discriminator evaluates whether the samples are real (from the true dataset) or fake (from the generator), pushing the generator to improve.

This adversarial process generates realistic augmented data, which can enhance our smell detector’s training set, helping combat overfitting by introducing diverse, plausible smell variations (e.g., new combinations of intensity or pleasantness). Now, we also must emphasize that GANs are not magical. Handled improperly, they will generate poor quality data. But it’s another story for another paper.
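Training a GAN is beyond the scope of a short example. As a much simpler stand-in, and explicitly not a GAN, the sketch below augments a dataset by adding small random noise (“jitter”) to the numeric features of existing samples while keeping their labels. It only produces variations around known samples rather than genuinely new profiles, and the noise level `scale` is an assumption that would need checking against real data.

```python
import random


def jitter_augment(dataset, copies=2, scale=0.05, seed=0):
    """Return the original samples plus `copies` noisy variants of each one.

    dataset: list of (features, label) pairs with features scaled to [0, 1].
    Each variant gets Gaussian noise (std = scale) on every feature, clipped to [0, 1].
    """
    rng = random.Random(seed)
    augmented = list(dataset)
    for features, label in dataset:
        for _ in range(copies):
            noisy = [min(1.0, max(0.0, x + rng.gauss(0.0, scale))) for x in features]
            augmented.append((noisy, label))  # same label: a slightly different smell of the same food
    return augmented


# Hypothetical samples: (intensity, pleasantness) -> 1.0 edible, 0.0 not edible
samples = [([0.8, 0.1], 0.0), ([0.3, 0.9], 1.0)]
bigger = jitter_augment(samples, copies=3)
print(len(bigger))  # 8: 2 originals + 2 x 3 variants
```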

I find it quite amazing that we have managed to create a system where we produce data to train an AI model. AI is training AI. How marvellous! …or not?

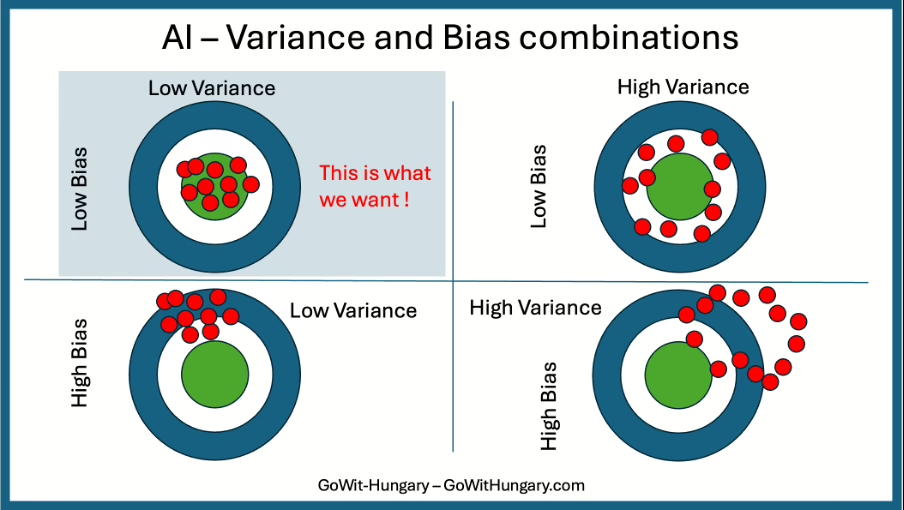

6 Fixing Bias and Variance: When the aim is wrong

Figure 6: Variance and Bias

Actually, bias and variance largely reflect underfitting and overfitting: high bias goes with underfitting, high variance with overfitting. So, most of the fixes listed above are valid here too. The job consists of finding the right balance between all these elements.

There is one more action that can be undertaken, though: assessing the intrinsic quality of the training dataset. Indeed, if for any reason the set has been badly calibrated and shows some intrinsic bias, this bias is likely to show up when the model is used live. To fix that, several tools can analyse your data before you use it for training. For instance, in the AWS world, one such tool is Amazon SageMaker Clarify. It analyses the bias in the data and can alert you before you start training your model. Considering the price and effort required to train an AI model, it is probably very wise to use such a tool from the start.
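Dedicated tools go much further, but the most basic check is easy to sketch: count how often each label appears and flag the set when one label is rare. The function names and the 0.2 threshold below are hypothetical choices for illustration, not Clarify’s API or defaults.

```python
from collections import Counter


def label_shares(labels):
    """Return each label's share of the dataset, e.g. {'edible': 0.9, 'spoiled': 0.1}."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def is_imbalanced(labels, min_share=0.2):
    """Flag the dataset if any label makes up less than `min_share` of it (assumed threshold)."""
    return min(label_shares(labels).values()) < min_share


labels = ["edible"] * 90 + ["spoiled"] * 10
print(label_shares(labels))   # {'edible': 0.9, 'spoiled': 0.1}
print(is_imbalanced(labels))  # True
```

This only sees labels that are present, so a category missing entirely would go unnoticed, and label balance is just one kind of bias among many.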

If your training set shows signs of bias, you can use a GAN (see Data augmentation above) to generate complementary data that balances the overall dataset. This is a rather convenient way to fix the problem, and it can be cheaper than gathering more data from the field to feed the training set.
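For comparison, a far simpler baseline than a GAN (and explicitly not one) is random oversampling: duplicate randomly chosen examples of under-represented labels until every label is as frequent as the most common one. The hypothetical `oversample` function below sketches it. Duplicates add no new information, so this mainly stops the model from ignoring rare cases, and it can encourage overfitting on them.

```python
import random
from collections import defaultdict


def oversample(dataset, seed=0):
    """Balance a list of (features, label) pairs by duplicating minority-label examples."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for example in dataset:
        by_label[example[1]].append(example)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw random duplicates (with replacement) until this label reaches the target count
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced


data = [([0.9, 0.2], "edible")] * 9 + [([0.2, 0.8], "spoiled")]
balanced = oversample(data)
print(sum(1 for _, label in balanced if label == "spoiled"))  # 9
print(len(balanced))  # 18
```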

7 Where is the Intelligence?

Just like for a human, training can go wrong. Fixing training issues makes AI reliable, saving businesses from costly errors like discarding safe food or approving spoiled ingredients.

Fortunately, the AI industry has developed a long list of tools both to identify the issues and to fix them. Of course, AI is often involved in fixing its own problems, but we can also see that humans have to make a lot of decisions, given the number of options available. If you have, say, 5 ways to fix a problem (more training, more data, better data, etc.), there are 5! = 5×4×3×2×1 = 120 possible orders in which to try them. That is a lot, and going through all of them could be prohibitively expensive. So, you’d better have an AI engineer available to guide you through the forest of options.
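The 5! figure counts the orders in which five fixes can be tried one after another. A quick check with Python’s standard library, using five hypothetical fix names, confirms it, and also counts the combinations you could choose to apply if order is ignored:

```python
from itertools import combinations, permutations
from math import factorial

# Hypothetical names for five possible fixes
fixes = ["more epochs", "more capacity", "more data", "better data", "feature engineering"]

orders = list(permutations(fixes))  # every order in which all five fixes can be tried
print(len(orders), factorial(5))    # 120 120

# Ignoring order: every non-empty selection of fixes to apply
selections = sum(1 for k in range(1, len(fixes) + 1) for _ in combinations(fixes, k))
print(selections)  # 31
```

And since each fix also has its own settings (how many epochs, how many layers, how much new data), the real search space is far larger than either number.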

The solutions we have presented in this paper are anything but “intelligent” in the cognitive sense of the term. There is a lot of cleverness from the researchers who came up with all these techniques, I give you that, but the AI model we are building still has no intelligence built in. So, we must keep looking…